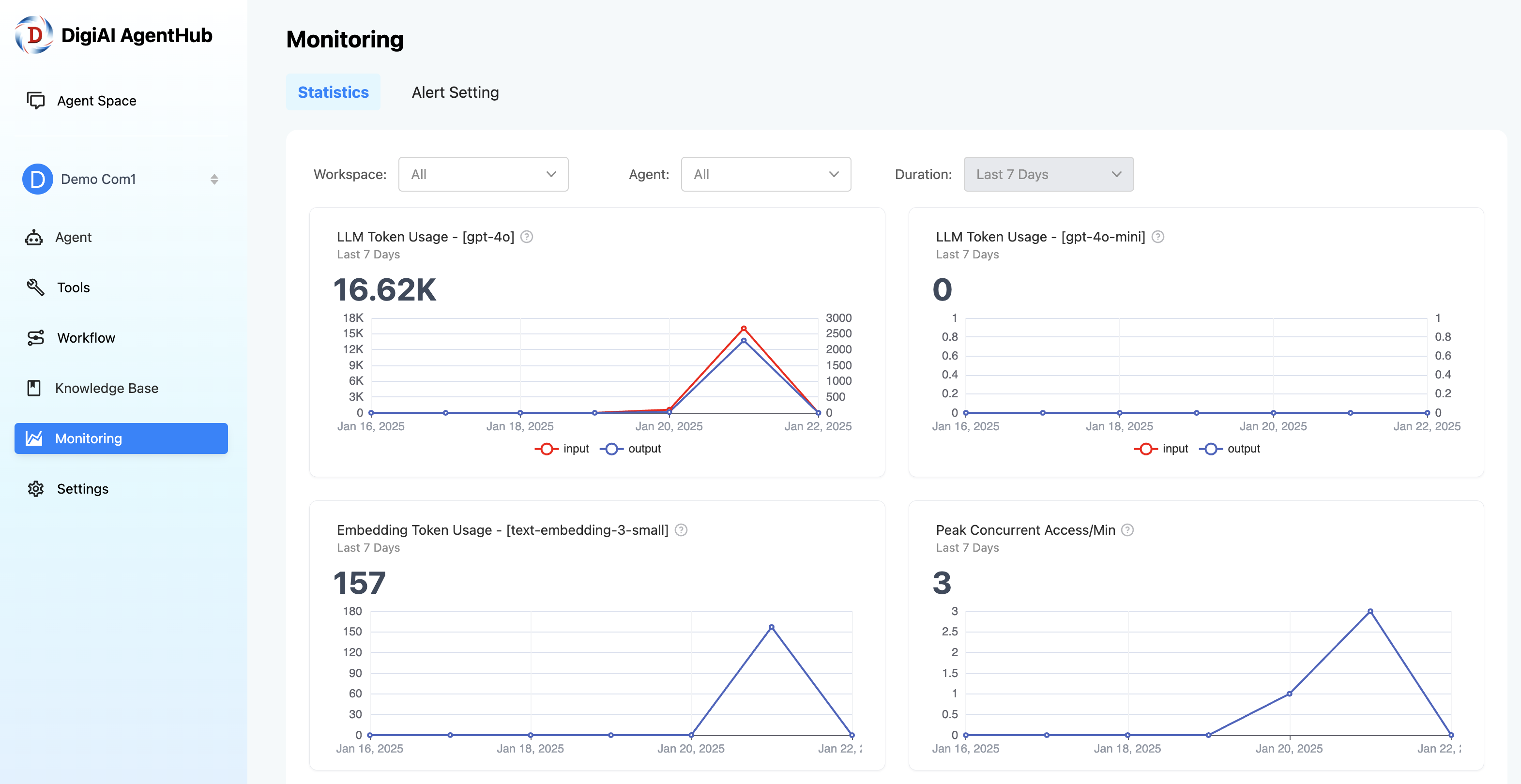

The Monitoring section lets you track resource usage and assess how effectively your agents perform. The Statistics dashboard shows a range of metrics for the production environment.

Key Metrics for Monitoring:

LLM Token Usage

Track the total number of input and output tokens processed by your Large Language Model (LLM), including how much of your subscribed LLM token add-on they consume. Knowing your token usage helps you decide whether to adjust your subscription.

Embedding Token Usage

Review the total count of embedding tokens used by the Knowledge Base, and monitor how much of your subscribed embedding token add-on they consume. This helps ensure you have enough capacity for efficient operation and informs decisions about adjusting the add-on.

Peak Concurrent Access

Observe peak concurrent access per minute to assess the maximum number of users interacting with your agent at once. This metric is crucial for determining if your subscription's rate limit add-on needs adjustment, ensuring consistent performance even during high-demand periods.
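As a rough illustration of how a per-minute peak could be derived, the sketch below buckets request timestamps by minute and counts distinct users in each bucket. The event format, the function name `peak_concurrent_per_minute`, and the "distinct users per minute" definition are all assumptions made for this example; the dashboard's actual calculation may differ.

```python
from collections import defaultdict
from datetime import datetime


def peak_concurrent_per_minute(events):
    """Return (minute, user_count) for the busiest minute.

    `events` is an iterable of (user_id, iso_timestamp) pairs.
    This log format is hypothetical, not the platform's export format.
    """
    users_by_minute = defaultdict(set)
    for user_id, ts in events:
        # Truncate each timestamp to the start of its minute.
        minute = datetime.fromisoformat(ts).replace(second=0, microsecond=0)
        users_by_minute[minute].add(user_id)

    if not users_by_minute:
        return None, 0

    # Pick the minute with the most distinct users.
    peak_minute = max(users_by_minute, key=lambda m: len(users_by_minute[m]))
    return peak_minute, len(users_by_minute[peak_minute])


sample_events = [
    ("u1", "2024-01-01T10:00:05"),
    ("u2", "2024-01-01T10:00:40"),
    ("u1", "2024-01-01T10:00:55"),  # same user, same minute: counted once
    ("u3", "2024-01-01T10:01:10"),
]
peak_minute, peak_users = peak_concurrent_per_minute(sample_events)
print(peak_minute, peak_users)
```

Comparing a peak like this against your rate limit add-on shows how much headroom remains during busy periods.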

Total Interactions

This metric counts the question-and-answer rounds that take place within user sessions. For instance, if a user engages in a 10-round Q&A session with the AI, it is recorded as 10 interactions. This data serves as an indicator of user engagement levels.

Total Conversations

Examine the total count of AI conversation sessions. Each new session counts as one, regardless of the number of message exchanges within it. Conversations related to debugging are not included.

Active Users

This figure is the number of unique users who have engaged meaningfully with the AI, defined as having multiple question-and-answer interactions.
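To make the difference between interactions, conversations, and active users concrete, here is a minimal sketch that computes all three from a list of session records. The `Session` record, the `summarize` function, and the threshold of two rounds for "multiple" are hypothetical. The sketch also assumes that each Q&A round counts as one interaction (as in the 10-round example) and that debugging sessions are excluded from every metric, although the text above states this only for Total Conversations.

```python
from dataclasses import dataclass


@dataclass
class Session:
    user_id: str
    rounds: int            # question-and-answer rounds in this session
    is_debug: bool = False


def summarize(sessions, min_rounds_for_active=2):
    """Compute the three engagement metrics from hypothetical session records."""
    # Assumption: debugging sessions are left out of all three metrics.
    production = [s for s in sessions if not s.is_debug]

    # Each session counts once, however many messages it contains.
    total_conversations = len(production)

    # Each Q&A round counts as one interaction.
    total_interactions = sum(s.rounds for s in production)

    # Assumption: a user is "active" with at least `min_rounds_for_active`
    # rounds in total across their sessions.
    rounds_per_user = {}
    for s in production:
        rounds_per_user[s.user_id] = rounds_per_user.get(s.user_id, 0) + s.rounds
    active_users = sum(
        1 for r in rounds_per_user.values() if r >= min_rounds_for_active
    )

    return {
        "total_conversations": total_conversations,
        "total_interactions": total_interactions,
        "active_users": active_users,
    }


sessions = [
    Session("alice", 10),
    Session("alice", 1),
    Session("bob", 1),
    Session("carol", 3, is_debug=True),  # excluded as a debugging session
]
summary = summarize(sessions)
print(summary)
```

In this sample, alice's two sessions count as two conversations and eleven interactions, while bob's single one-round session adds a conversation and an interaction but does not make him an active user under the assumed threshold.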